VIS/NIR IMAGING IN LONG DISTANCE AND VIS/NIR CAMERAS

VIS/NIR refers to cameras that use visible light (VIS, 400-700 nm) and near-infrared (NIR, 700-1100 nm) wavelengths to produce color images in daylight and black-and-white images in low light at night. Visible light cameras use the same spectrum perceived by the human eye, capturing and processing light in red, green, and blue (RGB) wavelengths for accurate color representation. Modern cameras can produce images at various resolutions to resolve detail but, like the human eye, they depend on available light. Their imaging performance is often significantly reduced by atmospheric conditions such as fog, haze, smoke, and heat haze. This limits visible light applications to daytime and clear skies.

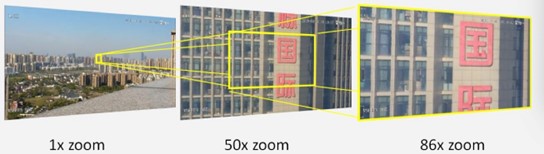

Cameras that can zoom in offer two modes: optical zoom and digital zoom. Optical zoom adjusts the field of view by moving lens elements inside the camera’s objective, whereas digital zoom captures a portion of the image and enlarges it with a software algorithm. Optical zoom maintains the spatial resolution of the imaging system, while digital zoom reduces it.

VIS CAMERAS: BASIS OF LONG DISTANCE IMAGING

Many variables affect the image quality of long-range cameras. For long-range imaging, the physical components of the camera should be examined first. Starting with the lens, one should examine how much the image is magnified, how much light passes through the lens, and how accurately and sharply the lens focuses the image.

Among cameras with the same sensor, the lens is the main differentiator. Between systems, the main differences come from the image sensor's resolution, sensitivity, and size, and from its image processing algorithms. Other important parameters in long-range imaging are environmental, such as air temperature and pressure. The air you look through consists of molecules that hinder light transmission and degrade the image even under the best conditions. Humidity, rain, snow, dust, and fog lower image contrast, and atmospheric turbulence caused by temperature changes over the observed distance distorts the image.

Long-range imaging systems are most usefully compared by their resolution. The common measure of resolution is Pixels Per Meter (PPM). PPM is the number of pixels that span 1 m of scene width at a given distance from the camera. It is the simplest way to measure a camera's long-range zoom capability and allows easy comparison between different types of cameras.

Due to differences in measurements from visible and thermal sensors, PPM calculations are computed differently for each technology. For visible/NIR sensors, PPM is calculated using Field of View and Sensor Resolution. Longer focal length lenses or smaller sensors produce narrower fields of view, whereas shorter focal lengths or larger sensors yield wider fields of view.

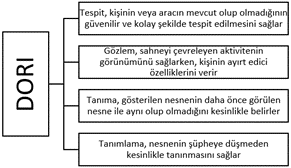
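The relationship between focal length, sensor size, field of view, and PPM can be sketched as follows. This is a minimal illustration that assumes a simple pinhole lens model; the function names and the example camera (1920 px wide, 6.4 mm sensor width, 320 mm focal length) are hypothetical and not taken from any specific product.

```python
import math

def horizontal_fov_deg(sensor_width_mm, focal_length_mm):
    """Horizontal field of view in degrees (pinhole model, an assumption)."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

def pixels_per_meter(h_resolution_px, hfov_deg, distance_m):
    """Pixels covering 1 m of scene width at the given distance."""
    scene_width_m = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    return h_resolution_px / scene_width_m

# Hypothetical example camera observing a target 1000 m away
hfov = horizontal_fov_deg(sensor_width_mm=6.4, focal_length_mm=320.0)
ppm = pixels_per_meter(h_resolution_px=1920, hfov_deg=hfov, distance_m=1000.0)
print(f"HFOV = {hfov:.3f} deg, PPM at 1000 m = {ppm:.1f}")
```

For this example the scene is 20 m wide at 1000 m, so 1920 pixels give 96 PPM. Doubling the focal length halves the scene width and doubles the PPM at the same distance.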

Color surveillance cameras (VIS/NIR) use another standard, which resembles the DRI (Detection, Recognition, Identification) standard but has different definitions. This standard is DORI, which stands for Detection, Observation, Recognition, Identification. While two pixels on a screen may be sufficient to count as “detection” in thermal imaging, using this level of detail to detect a target in a visible imaging system would be nearly impossible if the exact location to look at is unknown. DORI is an industry standard that defines the level of detail, in PPM, required to achieve detection, observation, recognition, and identification with visible light surveillance cameras. The international DORI standard, specified in IEC/EN 62676-4:2015, defines the detail levels as Detection (25 PPM), Observation (62 PPM), Recognition (125 PPM), and Identification (250 PPM).

DRI/DORI measurements generally disregard atmospheric conditions. Because environmental factors are almost never ideal, the distances achieved in real use are almost always shorter than the stated ones.
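As a worked example, the DORI thresholds can be turned into nominal maximum distances for a given camera. The sketch below assumes a pinhole lens model and ideal atmospheric conditions, so the results are upper bounds rather than field performance; the function name and the example camera parameters are hypothetical.

```python
# DORI detail levels in PPM, as quoted in the text
DORI_PPM = {
    "Detection": 25,
    "Observation": 62,
    "Recognition": 125,
    "Identification": 250,
}

def dori_distances_m(h_resolution_px, sensor_width_mm, focal_length_mm):
    """Nominal distance at which each DORI level is still met.

    Pinhole model (an assumption): the scene width at distance d is
    d * sensor_width / focal_length, so
    PPM = h_resolution * focal_length / (sensor_width * d).
    """
    return {
        level: h_resolution_px * focal_length_mm / (sensor_width_mm * ppm)
        for level, ppm in DORI_PPM.items()
    }

# Hypothetical camera: 1920 px wide, 6.4 mm sensor width, 320 mm lens
distances = dori_distances_m(1920, 6.4, 320.0)
for level, d in distances.items():
    print(f"{level:<15}{d:8.0f} m")
```

For this example camera the nominal identification distance is 384 m and the detection distance is 3840 m, before any atmospheric losses are considered.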

ADVANTAGES AND DISADVANTAGES OF VIS/NIR IMAGING

| | DRI (THERMAL) | DORI (VIS/NIR) |
|---|---|---|
| Human Detection | 1-3 PPM | 25 PPM |
| Human Recognition | 3-7 PPM | 125 PPM |
| Human Identification | 6-14 PPM | 250 PPM |
| Vehicle Detection | 1 PPM | 25 PPM |
| Vehicle Recognition | 2-3 PPM | 125 PPM |
| Vehicle Identification | 5-6 PPM | 250 PPM |

Presently, the two types of imaging systems that stand out are thermal and VIS/NIR imaging systems. These two technologies differ not only in the images they produce but also in how these images can be beneficial for surveillance. The fundamental difference between the two imaging systems lies in the source of the light being imaged. In thermal imaging, the source of light is the electromagnetic waves emitted by the object being imaged, whereas in VIS/NIR imaging, the light originates from external sources and is reflected by the object. Thermal imaging excels in detecting threats or targets but falls short in creating detailed images of these targets. VIS/NIR imaging, while more challenging for threat detection, can provide excellent detail for identifying long-range targets.

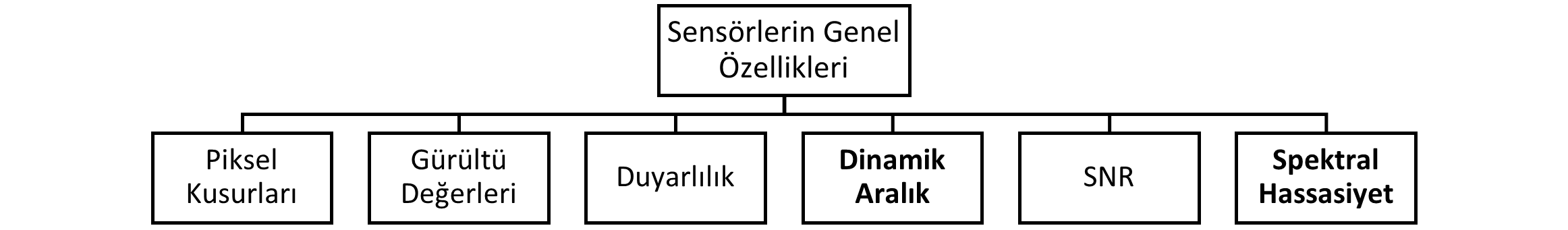

TYPES OF VIS DETECTORS

There are two main sensor technologies used in VIS/NIR long-range imaging: CCD and CMOS. Because a CCD is a passive-pixel device (with no electronics at the pixel level), its quantum efficiency is very high. This is an advantage in applications where light is weak. On the other hand, the low frame rate of CCDs is a problem in some applications. CMOS sensors are based on an active-pixel array, in which the output of each pixel is read and sampled individually. CMOS sensors achieve higher frame rates and make it easier to define a region of interest (ROI) thanks to their simpler readout scheme. However, they suffer from higher noise levels due to the readout transistors associated with each pixel.

In VIS/NIR sensors, the shutter refers to the manner in which an image is captured and read out.

In a rolling shutter, the exposure time is the same for all pixels, but the exposure of each row starts slightly later than that of the previous row. The resulting image is therefore captured progressively over time rather than all at once. This can pose challenges for applications that involve fast motion or require high frame rates.

In a global shutter, the exposure time for all pixels starts and ends simultaneously. This means that the information provided by each pixel represents the same time interval during which the image was captured. This type of shutter is essential for fast applications.

While CCD sensors only have the option of global shutter, CMOS sensors can employ both rolling and global shutter modes.
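The practical effect of the row-to-row delay in a rolling shutter can be estimated with a small sketch. It assumes a constant per-row delay and an object moving horizontally at constant speed in the image; the function names and the numbers (1080 rows, 15 µs per row, 2000 px/s) are illustrative assumptions, not values from a specific sensor.

```python
def row_start_times(n_rows, line_time_s):
    """Exposure start time of each row relative to the first row."""
    return [row * line_time_s for row in range(n_rows)]

def horizontal_skew_px(n_rows, line_time_s, speed_px_per_s):
    """Horizontal displacement between the first and last row for an
    object moving sideways at constant speed during readout."""
    return speed_px_per_s * (n_rows - 1) * line_time_s

# Rolling shutter: hypothetical 1080-row sensor with 15 microseconds per row
starts = row_start_times(1080, 15e-6)
skew = horizontal_skew_px(1080, 15e-6, speed_px_per_s=2000.0)
print(f"last row starts {starts[-1] * 1e3:.3f} ms after the first")
print(f"skew across the frame: {skew:.2f} px")

# Global shutter: every row starts at the same time, so the delay is zero
global_skew = horizontal_skew_px(1080, 0.0, speed_px_per_s=2000.0)
```

With these assumed values a vertical edge would appear slanted by roughly 32 pixels from top to bottom, while a global shutter shows no such skew.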

Dynamic range is the ratio between the maximum and minimum signals the sensor can acquire. Above the upper limit, pixels saturate and appear white; below the lower limit, pixels appear black. Dynamic range is typically expressed logarithmically as the ratio of maximum to minimum signal, in decibels (base 10) or stops (base 2). For instance, the human eye can distinguish objects under both starlight and bright sunlight, corresponding to an intensity range of about 90 dB. However, this range cannot be used simultaneously because the eye needs time to adapt to different lighting conditions. Some CMOS sensors have a dynamic range of approximately 23,000:1 (14.5 stops, or about 43 dB when computed as 10·log10 of the ratio).
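The conversion between a linear dynamic range ratio, stops, and decibels can be checked with a few lines. The sketch below is illustrative; the function names are hypothetical. The decibel figures quoted above are consistent with 10·log10 of the ratio. Many sensor datasheets instead use 20·log10, which doubles the decibel value for the same ratio, so it is worth confirming which convention a given specification uses.

```python
import math

def dynamic_range_stops(ratio):
    """Dynamic range in stops (base-2 logarithm of the max:min ratio)."""
    return math.log2(ratio)

def dynamic_range_db(ratio, factor=10):
    """Dynamic range in decibels.

    factor=10 matches the figures quoted in the text;
    factor=20 is the convention found on many sensor datasheets.
    """
    return factor * math.log10(ratio)

ratio = 23_000  # the 23,000:1 CMOS example from the text
stops = dynamic_range_stops(ratio)
db10 = dynamic_range_db(ratio, factor=10)
db20 = dynamic_range_db(ratio, factor=20)
print(f"{stops:.1f} stops, {db10:.1f} dB (10*log10), {db20:.1f} dB (20*log10)")
```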

The spectral sensitivities of CCD or CMOS cameras typically range from 350 to 950 nm, with the peak region usually between 400 and 650 nm.

PROBLEMS IN LONG-RANGE IMAGING AND THEIR SOLUTIONS

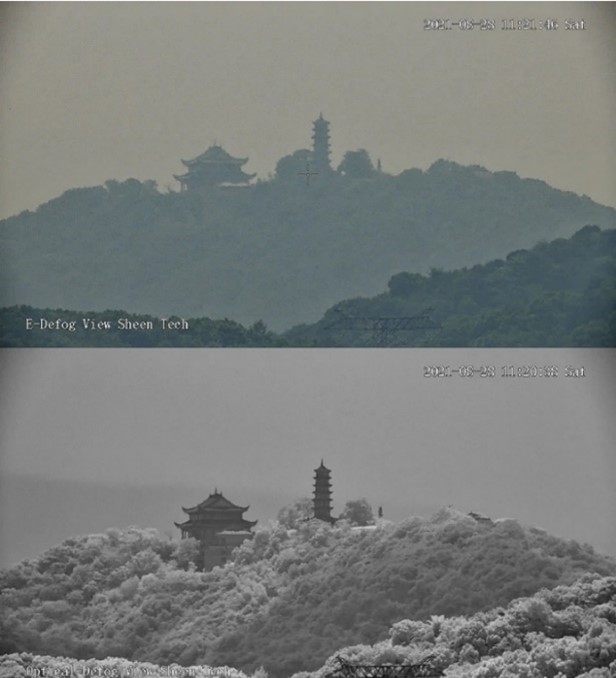

Fog is an important factor in long-range imaging. Two methods are used to reduce its effects in VIS/NIR cameras: optical and digital defogging. Optical defog exploits the fact that different wavelengths penetrate fog to different degrees: the system engages a filter that passes longer wavelengths, which yields a clearer image of the target in foggy or misty conditions. In digital (electronic) defog, by contrast, the captured image is post-processed by an algorithm that emphasizes the object features of interest while suppressing irrelevant ones. Especially over long distances, optical defog has the upper hand over electronic defog.

Image Stabilization (IS) refers to keeping the image steady during capture. Blurry images have many causes, such as low light, long focal lengths, camera shake, and slow shutter speeds. A camera equipped with Optical Image Stabilization (OIS) uses an internal motor to physically move one or more lens elements to counteract camera movement. A camera with OIS can produce clearer images in low light than one without. Electronic Image Stabilization (EIS) is achieved in software: to stabilize a shaky video, the camera crops each frame and shifts the crop window to follow the scene, discarding the border. The more zoom is used with EIS, the lower the final image quality becomes.
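One common way to picture EIS is as a crop window that moves inside the full sensor frame to follow the scene. The sketch below illustrates only that idea, assuming the per-frame image shift has already been measured (for example by a gyroscope or motion estimation); the function name, the margin size, and the shift values are hypothetical and do not describe any particular camera's algorithm.

```python
def stabilized_crop(frame_w, frame_h, margin, shift_x, shift_y):
    """Return (x0, y0, width, height) of the crop window for one frame.

    shift_x / shift_y: measured displacement of the scene in the full
    frame, in pixels. The window follows the scene by the same amount so
    the content stays fixed in the output, clamped to the reserved margin.
    """
    x0 = min(max(margin + shift_x, 0), 2 * margin)
    y0 = min(max(margin + shift_y, 0), 2 * margin)
    return x0, y0, frame_w - 2 * margin, frame_h - 2 * margin

# Hypothetical 1920x1080 frame with a 64-pixel stabilization margin
print(stabilized_crop(1920, 1080, 64, shift_x=10, shift_y=-5))
print(stabilized_crop(1920, 1080, 64, shift_x=100, shift_y=0))  # clamped
```

In this example the output is always 1792 x 952 pixels, so the margin reserved for stabilization comes directly out of the usable resolution, and a shake larger than the margin can only be partially corrected.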

Heat haze occurs when changes in air temperature cause the air to expand or contract, producing density variations. Light passing through this turbulent air undergoes numerous irregular refractions. Heat haze reduction improves image clarity by optimizing both the lens’s optical design and the imaging algorithm to counteract these temperature-induced fluctuations.

FEATURES OF LONG-RANGE VIS/NIR LENSES

Intended Uses of Long-Range VIS/NIR Imaging

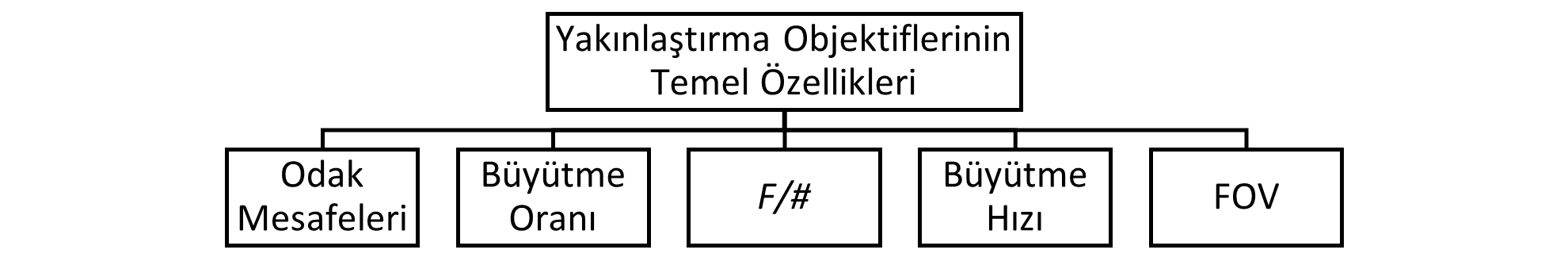

VIS/NIR Camera Key Parameters